In Conversation with Nishant Shah

H&D: When can we say that something is true? Or that we understand something?

Nishant Shah: This is a very provocative question. You start by asking: when can we say that something is true? It's the oldest philosophical question there is. There are so many different approaches to it. If you go back all the way to George Berkeley, who talked about the tree falling in a lonely forest, he states that if there is nobody there to watch it then it didn't really fall. And to Lawrence Lessig, who playfully says that if a tree falls in a lonely forest and there is nobody to tweet it then it didn't really fall. So what do we do with the notion of truth? I thought it might be helpful to not talk about whether something is true or not. That would presume that truth is intrinsic. What you really want to think about is that our social understanding of truth is actually an extensive one, not an intensive one. It's all about measurements. Look at any discipline that relies on truth telling. Eventually, it boils down to the question: how can we measure, given the instruments that we have, whether something is truthful or not? That is the best path we can go down. If we look at a quote by Locke and at the history of how evidence has been produced in court, it begins by talking about the idea of a witness. Somebody has to be able to see it. And somebody else has to be able to decide whether the person who has seen it was capable of seeing what they said they have seen. It's almost like a blockchain trust mechanism. You need multiple people to physically vouch for your capacity and integrity to tell the truth. Which is how the jury system comes into being. Witnessing has always been about measurement and the capacity to measure. The more modern technology proliferates, the more new measures you have. Right now, for example, I'm very fascinated with these genetic measurement tests that people do to learn the ‘real truth’ about themselves. There is an incredible video of a very blonde, white, Irish woman who gets her results, which reveal that she is one-fourth African. And she cries, kneels down and has this profound moment of revelation, and says "Oh my god, I would never have thought I was Black!" At this moment you want to smash your screen and say "No, you are not!" Truth and measurement is what’s interesting. How do you verify the measurement of truth, as opposed to trying to figure out what is intrinsically true?

That's a question that comes up in the art world all the time when we talk about questions of authenticity. Especially in visual and plastic art. If somebody has a painting and claims it is a Leonardo da Vinci you don't look at this person and think "it’s true, it captures the essence and the spirit, and the joy and power of Leonardo da Vinci". You go back to measurements: you measure the pigmentation, brush strokes, colours, paper, light, and chemicals. That would be my proposition: that we don’t talk about when something is true, we talk about how we can measure truth. Truth is obviously not a human question, it is an entirely technological question. Your experience of something has absolutely no validity when it comes to truth; your experience of your whole self has no validity in terms of truth. If you look at the experience of Trans people in countries where Trans identities are not accepted, their bodily and personal truth is overwritten by the binary measurements and matrices which are weaponised to try and deny the person’s truth claims to their own gender and sexual identity.

It's suddenly more of a technical question, and that is why digital technologies become so important. What digital technologies have essentially been doing from the late 12th century until now is helping us to establish what we understand as the first principle of truth. Each discipline, each mode of inquiry (especially post-Enlightenment) has tried very hard to state that this is our first principle. All of them rely on representation as their basic aesthetic. Here is a person and here is their picture: how close can the picture come to representing the truth of the person? Can this be done accurately or not? Donna Haraway points out in A Cyborg Manifesto (1985) that the biggest interruption of digital technologies is the disruption of the first principle of truth telling. The new default for truth measurement within the digital is actually simulation, and not representation. It's very fascinating. Donna Haraway says something like: if you write a program to do mathematical calculations, and if you ask what one plus one is equal to and the program says three, that is in fact the truth of the program. The human in front of the program might say that it is not true, and that one plus one equals two, but as far as the program is concerned it was programmed to give the answer: one plus one equals three. The software, the rules and the protocols are going to measure things differently. In representation, the copy has to measure up to the original, whereas in simulation the original has to measure up to the copy. However your self is represented, you have to measure up to it. The minute you are told you are one-fourth African you have to measure up to it. That might be one way of thinking through it.

H&D: What immediately comes to mind is the question of accountability. When you say the program is programmed, the human influence inherent in that program cannot then be denied. A person made the decision to write the program in a certain way, sometimes without considering the potential consequences.

Nishant Shah: Sometimes people do consider consequences and they still make this choice. Accountability becomes a very interesting phenomenon here. Accountability presumes responsibility. Responsibility is also a representational problem. It presumes there is a ‘real’, truthful, authentic self who at the end of the day has an authorial position. Representation requires authorship. Did you know that the film Snow White and the Seven Dwarfs was banned in the US in the 1960s? They thought it promoted Communism by showing children hammers and sickles, the red flag, and dwarfs going to the mine to work. They banned the film! Walt Disney went to court and said "there can be no Reds (which referred to communists at the time) in Snow White unless we intend them to be". That's interesting. Disney is essentially saying that a film has authorship, and if the author intended it to be communist then it is. But if the author says that it's not, then it's not. That was such an innocent time, when you could accept that one author gives meaning to their work and that is the only valid meaning. The court accepted it! We are now in a postmodern limbo, and we have to accept that the author is not only dead but didn't really exist to begin with. The author never lived. There is a new form of distributed authorship, which makes both responsibility and accountability very difficult.

I have been spending all of my free time in the last two months on TikTok. If any of you download TikTok you will be spending the next three months on it, being sucked into the rabbit hole. TikTok is a Chinese content delivery app. It consists of 30 second videos, and it looks a bit like Snapchat. It has extensive filters for audio, sound and visual manipulation. TikTok is really interesting because it is the first social media company that does not have its origin in content production, but in artificial intelligence development. On TikTok you don't have to know anybody, you don't have to like anybody, you don't have to follow anybody, you don't even need to identify yourself in any particular way, and it gives you millions of hours of unlimited video programming that is curated by AI somewhere. There is no human curation involved. Imagine how smart that AI is and the amount of granular data capture it must be doing. Because, of course, you sign in with a Facebook or Google account, so it presumes something about who you are as a data subject. After six minutes of working with it, it processes the videos that I watch for long enough and it starts showing me videos I simply can't stop watching. It was better than anything Spotify has ever done. There was not a single video after those first six minutes that I would have wanted to swipe left (which means not wanting to watch them). Of course it has all the hooks. It's very short so even if it's slightly boring you will still want to see what happens! The more I use it the more sophisticated it becomes. It's better than my boyfriend at judging what I'm going to like, and he has known me for eight years! I have been playing around with TikTok with two students to see when we find videos we really don't like. It's very scary that we haven't come across any yet. TikTok often presents very controversial materials to one of the students who has a very constructed social media profile.
I've been looking into TikTok's preferences. There is nobody you can hold accountable for what you are shown. There is simply no human curation. There is merely an algorithm that responds to everything that you do. So that brings us to the question: what do you do in a world where authorship is no longer presumed as human?

This is where the work with Arthur Steiner and Leonardo Dellanoce (who were speakers at The Universe of ( ) Images Symposium) actually begins. Within visual cultures especially we have always presumed that there must be a human behind the content. Throughout history we have always returned to human agency. From a humanist and social background we keep on fighting for human agency. How can we take control? It remains in some elements of truth telling, but the new principles of truth telling intrinsically do not presume either human perception or human agency. My friend Mercedes Bunz, who wrote a book called The Silent Revolution: How Digitalization Transforms Knowledge, Work, Journalism and Politics without Making Too Much Noise (2014), writes about how this is true in journalism in particular, where 40% of data in journalism is now authored by non-human subjects, and is also consumed by non-human subjects. A lot of information that we produce online never reaches human consumption because we are saturated with information. There are Google's spiders, the chat indexes, and the archival protocols of the internet. These different kinds of big data services are continuously crunching the information we are using. It is alarming but also fascinating, and it means that textual, narrative information which we have always passed from human to human is now being authored and consumed by non-human actors … so what do we do with accountability?

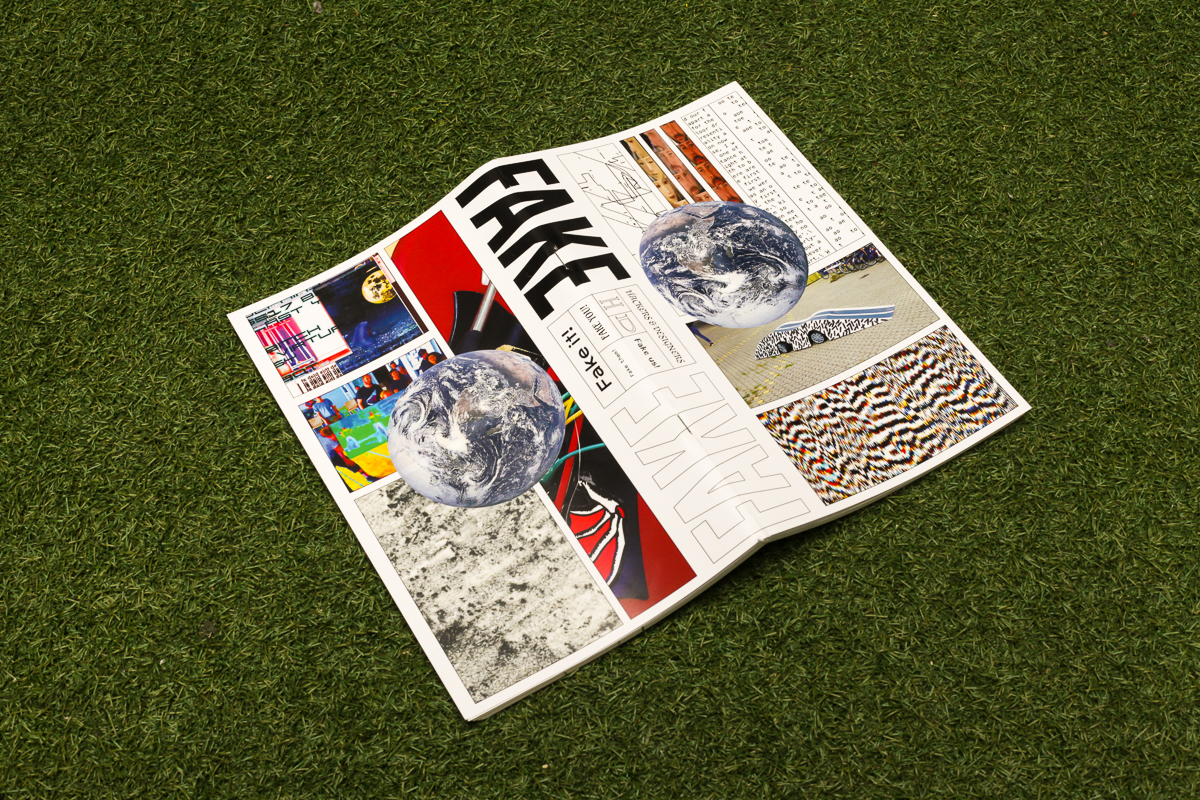

Published in Fake it! Fake them! Fake you! Fake us! Publication in 2019