Training and the problem of data


===What would it take to adopt a fugitive statistics?===

Lecture by [[Ramon Amaro]] during the Hackers & Designers Summer Talks 2015

I’d like to start with short descriptions of two projects. The first is a conceptual work by artists Keith and Mendi Obadike called ‘The Interaction of Coloreds’. The second is a research project conducted by Dr. Latanya Sweeney on the social implications of Google AdSense.

My hope is that this discussion will take us beyond our current understandings of data practices, user experience, user interaction or the coding environment, and instead intervene somewhere in between these spaces, where, as I will discuss later, often unseen yet explicitly clear violences reveal themselves in data-dependent web and design practices. I use the word ‘violence’ here intentionally. It’s a strong word that few would associate with a matrix of data or with design and coding applications. After all, it’s just code. It’s just data. But I believe violence is a necessary description of the possible residual effects of practices that do not carefully consider the cultural circumstances that inform the data we use as input for training purposes, or how interactions with our digital applications might be infused with cultural biases, even when these technologies (or the designers themselves) are not aware of the cultural impacts of the products they provide.

My concern here is then two-fold: how can theories behind the maths, and the digital practices that they power, inform certain rationales concerning the development of an ideal agency? What I mean is, how did the maths that inform our present coding languages, algorithm designs and other design practices come about, and through which logics? And is there a conflict between how the maths were derived and how we apply them to today’s digital environments?

Consider the genealogy of statistical regression or Bayesian probabilities, both of which are foundations of deep mining and machine learning design. There’s no time to go into the specifics here, but generally, linear regression came about through Poisson’s experimentations with jury decisions, which led to his important work on the law of large numbers (la loi des grands nombres) in 1837, a fundamental baseline for most quantitative research conducted today. In short, the law of large numbers applies statistical tests to translate rare events, like jury decisions, into probabilities of certain outcomes, making it possible to incorporate variability into generalisations of otherwise chaotic systems. Nonetheless, Poisson viewed probabilities as degrees of belief, or judgements by rational individuals.
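As a rough illustration of what the law of large numbers claims, the following Python sketch simulates repeated yes/no outcomes (say, individual verdicts) that each occur with a fixed probability, and tracks how the observed frequency settles towards that probability as the number of trials grows. It is not drawn from Poisson's own work: the function name `running_frequency`, the probability 0.3 and the random seed are assumptions chosen purely for illustration.

```python
import random


def running_frequency(p, n_trials, seed=0):
    """Simulate n_trials independent yes/no outcomes with probability p
    and return the observed frequency of 'yes' after each trial."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = 0
    freqs = []
    for i in range(1, n_trials + 1):
        if rng.random() < p:
            hits += 1
        freqs.append(hits / i)
    return freqs


# Assumed, illustrative probability of a single outcome.
freqs = running_frequency(p=0.3, n_trials=100_000)

# Early frequencies fluctuate; later ones stay close to p.
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, round(freqs[n - 1], 4))
```

Note that the sketch only ever yields a likelihood for the group as a whole. It says nothing certain about any single trial, which is the distinction the lecture goes on to stress.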

Now, we can debate ideas of what a rational individual is or should be, and perhaps even use that discussion to think about why we are so eager to build rational machines without a clear definition of what this means in the first place, but that’s for a later time. For now, I want to stress that early research into large group behaviour was viewed through contingencies or likelihoods, not certainties. It was not until likelihoods were put into use as mechanisms of prediction that large group behaviour, and subsequently control of these behaviours, turned into rationales of objectivity by mathematics that were very good (or bad, depending on how you look at it) at stating what might occur when living beings exhibit certain characteristics associated with certain phenomena. I hope at this point you can see how this logic has transferred into modern digital product development, where the rational machine is sufficient to define social qualities as well as reinforce its own role in knowing the unknowable.

Of course, these provocations elicit certain reactions in some design and research spaces, particularly those in deep engagement with quantitative study, statistical analysis or a sort of statistical coding, if you will, in practices like machine learning. After all, there are extensive genealogies of mathematical proofs that solidify our confidence in statistics, and extensive research that has placed the experiences of the user at the fore of complex mathematical and coding processes.

However, as cultural theorist Oscar H. Gandy, Jr. states, rational decision making plays a key role in the distribution of life chances.''I'' I’m hoping this will become clearer later on when I talk about specific cases, starting with an experiment in digital practice by Keith + Mendi Obadike.

In August 2002, artists Keith + Mendi Obadike launched ‘The Interaction of Coloreds’ (Figure 1.0), commissioned by the Whitney Museum of American Art. The Interaction of Coloreds is a conceptual work for audio and the Internet. Through The Interaction of Coloreds, the artists ask how social filters, what Keith + Mendi Obadike define as 'systems by which people accept or reject members of a community', are in conversation with skin colour.''II'' The artists draw on designer Josef Albers's Interaction of Color, a design text which features ten representative color studies chosen by Albers. Albers elaborates on the displacement of colour selection in the subjective by stating that our preference for certain colours is governed by an internal process. Re-conceptualising Albers's claim, Keith + Mendi Obadike explicitly note that practices of administering so-called ‘color tests’ originated on slave plantations as a basis for social selection. Brown paper bags were used to classify which slaves were suitable for certain work based on skin colour. Those with skin darker than the bag were sent to the fields. Those with lighter skin were placed indoors. The artists conceive that the practice of administering associations of skin colour with social production remains in conversation today in processes of employment seeking, university applications, consumer profiling, policing, security, transportation and economics.

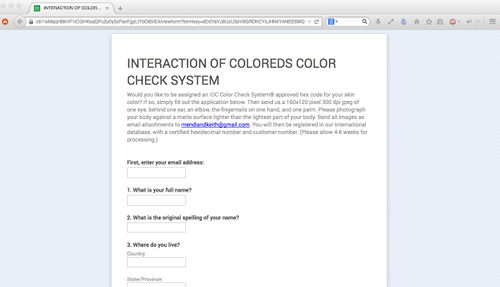

By asking The Interaction of Coloreds participants to apply online for a hexadecimal code to identify their 'Websafe' skin colour, Keith + Mendi Obadike contend that colour can be defined more 'accurately using current race-based language'. The work serves as a marker by which participants can navigate the abstractions of social access, while making explicit the coding of skin colour as a counter-performance of blackness into the digital. As Christopher McGahan writes: '"Mongrel" forms of knowledge making are introduced into technologies of data gathering and organization where these are normally excluded and marginalized, allowing for a preliminary evaluation, from both a political and ethical vantage, of the technical and social functions of such technologies in practice'.''III'' In this way the Obadikes' work is a reflection of the extensions of technologies into the racialisation of the body, not from simply conjoining the issues of race with technology to unravel the centrality of data processing on racial subjugation, but by displacing myths of race as either purely biological or purely cultural.''IV''
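For readers unfamiliar with the term, 'Websafe' refers to the 216-colour web-safe palette, in which each of the red, green and blue channels takes one of six hexadecimal values (00, 33, 66, 99, CC, FF). The short Python sketch below shows only what such a code is, by snapping an arbitrary RGB value to its nearest web-safe hexadecimal code. It is not a reconstruction of how the artwork assigned codes to participants, and the function name `to_websafe_hex` and the sample value are assumptions made for illustration.

```python
WEBSAFE_STEP = 0x33  # web-safe channels are multiples of 0x33 (51)


def to_websafe_hex(r, g, b):
    """Return the nearest web-safe colour to (r, g, b) as a hex code."""

    def snap(channel):
        if not 0 <= channel <= 255:
            raise ValueError("channel values must be between 0 and 255")
        # Round to the nearest of 0, 51, 102, 153, 204, 255.
        return round(channel / WEBSAFE_STEP) * WEBSAFE_STEP

    return "#{:02X}{:02X}{:02X}".format(snap(r), snap(g), snap(b))


# An arbitrary sample value, chosen only to show the quantisation.
print(to_websafe_hex(141, 85, 36))
```

The reduction is severe: more than sixteen million possible RGB values collapse into 216 categories, which is part of what makes the palette a pointed stand-in for classification.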

The conversations posed by Keith + Mendi Obadike bring into question the territorialisation of the subjective by means of social filter and the place of the digital within racialising practices. Through interaction with the code itself, it is within reason that the production of subjective categorisation is placed in reference to its explicit uses as value in a race-imbued society. The project's detailed application form (Figure 2.0), including phenotypic descriptions and family histories, amplifies the field of interactions between technologies of both the human and machine, where the algorithm itself, inclusive of its mathematical hypothesis, becomes a mediator between the acceptability of forced placement and subsequent restriction of movement in social space and time. Wendy Hui Kyong Chun stresses that the convergence of race and technology 'shifts the focus from the what of race to the how of race, from knowing race and doing race to emphasizing the similarities between race and technology.'''V'' Chun notes further that considerations of race as technology reduce the complexity of a fruitful body of research investigating race as a scientific category in favour of race as a practice that facilitates comparisons between entities classed as similar and dissimilar, in other words taxonomy.

This experiment in culture, or art (what is art if not an experiment in possibilities?), illuminates the role of digital design practices in the manoeuvrability of certain people. However, these experiments are not limited to the speculative space. They have applicable consequences that have already foreclosed on the life chances of many individuals. Studies have shown that commonly used self-learning machines can produce results that reveal racist and other discriminatory practices, even if the algorithms are not designed with these intentions.''VI'' In other words, machines may not yet comprehend the dynamics of racism, but they can be racist. For instance, in 2013 Dr. Latanya Sweeney, Professor of Government and Technology in Residence and Director of the Data Privacy Lab at Harvard University, studied the delivery of online ads. In her study, Sweeney found that online ads suggesting arrest records appeared more often in searches for ‘black-sounding’ names than in searches for ‘white-sounding’ names. The obscurity of the measurables, ‘black-sounding’ and ‘white-sounding’, only illuminates the importance of Sweeney’s research question: can racial groups be adversely affected by a digital application assumed to be egalitarian? Sweeney empirically questions suspected patterns of ad delivery in real-world searches. The study began with the assumption that personalized ads in Google Search suggestive of arrest records did not differ by race. This assumption was tested by constructing the scientifically best instance of the pattern, that is, one with names shown to be racially identifying and pseudo-randomly selected. This was correlated with a proxy for race associated with racialised names, including hand-picked names, and filtered through Google Images. What Sweeney found was that ads displayed by Google AdSense suggesting arrest tended to appear with names associated with blacks, and neutral ads or no ads appeared with names associated with whites, regardless of whether that person had been previously arrested. The findings question the place of bias in real-time learning systems and how knowledge exchange operates despite the potential causes of discrimination.
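To make the logic of such a test concrete, here is a minimal Python sketch of one common way to check whether two groups of names receive arrest-suggestive ads at different rates: a chi-square test on a two-by-two table of counts. This is a sketch under stated assumptions, not Sweeney's procedure or data. The counts are invented for illustration, and the function name `chi_square_2x2` is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (no continuity correction) and p-value
    for the 2x2 table [[a, b], [c, d]], with one degree of freedom.
    Assumes no row or column total is zero."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    chi2 = n * (a * d - b * c) ** 2 / denom
    # For one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# HYPOTHETICAL counts, invented for illustration only (not Sweeney's data).
# Each tuple is (searches showing an arrest-suggestive ad, all other searches).
group_a = (60, 40)
group_b = (48, 52)

chi2, p_value = chi_square_2x2(*group_a, *group_b)
rate_a = group_a[0] / sum(group_a)
rate_b = group_b[0] / sum(group_b)
print(f"rate A = {rate_a:.2f}, rate B = {rate_b:.2f}")
print(f"chi-square = {chi2:.3f}, p = {p_value:.3f}")
```

The test starts from the assumption named in the text, that ad delivery does not differ by group, and asks how surprising the observed counts would be if that assumption held. It can flag a disparity, but it cannot say how the system produced it.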

[[File:figure1.0.jpg|500px|Figure 1.0 - The Interaction of Coloreds (2002). Source: http://www.blacknetart.com/IOCccs.html]]

Although Sweeney’s experiment does not point towards explicit intentionality by Google as the mediator of data exchange, much as in Foucault’s analysis of Borges’s animal taxonomy, it recognises the implicit nature of biases and suspicions of digital profiles that play themselves out at the point of user engagement. Therefore, as Sweeney asks, how can designers or coders think through societal consequences such as structural racism in technology design? And how can technologies be built to instead form new techno-political experiences for classified users?

The difficulty here is that most generalisation patterns seek to improve performance without altering existing knowledge frameworks.''VII'' For knowledge-based learning researchers, knowledge is seen as ‘a succinct and efficient means of describing and predicting the behavior of a computer system’.''VIII'' From within this context, advocates argue that knowledge should not be suppressed, and that it is important to introduce levels of description, such as previous knowledge, specification, design, verification, prediction, explanation, and so on, depending on the assigned task, even though every level of description is known to be incomplete.''IX'' Otherwise, the complete details of a system are suppressed and held in partiality by every level in order to focus on certain aspects of the system deemed most important. Hence, systems at the level of inquiry are assumed to be approximations, incapable of making certain predictions since the details necessary to make complete assessments are missing.

[[File:figure2.0.jpg|500px|Figure 2.0 - The Interaction of Coloreds (2002). Source: http://www.blacknetart.com/IOCccs.html]]

Sweeney’s results are not alone in their implications. Other studies indicate that pricing and other automated digital commerce systems display higher prices in geographical areas known to have large concentrations of black and minority individuals. Analysis of Google Maps indicated that searches for racist expressions, such as the ‘n-word’, directed users to President Barack Obama’s residence at the White House. More recently, it was discovered that photo service Flickr’s automated tagging service had mislabeled a black woman under the category of ‘monkeys’, a racial stereotype often associated with black people.

Although these results are striking, there is little data to indicate how the machines determined these outcomes. To what degree are self-learning machines influenced by human factors, like racial profiling? To what extent do machines learn to not just replicate social discriminations, but make decisions based on race-based factors? As machines become more sophisticated at relying on their own rationales to make decisions, will they one day decide on their own to prefer some individuals and populations over others? And, if so, what value systems will they place on the outcomes? Although these questions are of immediate importance, current research lacks sufficient insight into these concerns.

One reason we fail to fully comprehend these dynamics is that current research does not extend far enough to reveal the full scope of how machines use data to learn and interpret their environments. Nor does it fully consider the types of data that are used to train the learning process. Further, most policy research on the future of self-learning environments speculates on the species-level impact of machine automation, where questions of human extinction mute more immediate concerns about the potential for local-level violences and discriminations.''X'' For instance, current research on self-driving vehicles evaluates machine performance based on autonomous decisions about the safety of drivers and pedestrians. In dynamic (or real-world) environments, these decisions might force the preference of one life over another to avoid an accident or in case of emergency. However, if machines have the capacity to place value on certain lives over others, is it plausible that they could also use specific cultural input data to determine which lives are preferenced based on factors other than personal safety? The same claims can be extended to automated weaponry and self-learning drones in the contexts of security. Considerations of these ethical factors are not to lessen the fact that our understandings of autonomous decision making are still in their infancy, which increases the importance of addressing these concerns today.

To conclude, I question how we might approach data from an alternative view that assumes any information mined in human environments already contains pre-existing cultural biases that can contaminate the machine learning process. As machines gain greater ability to automate and self-train, we cannot ignore the fact that machines, despite their levels of sophistication, are limited by the sensory perceptions of their environments. At present these environments are infused with data that articulate ecologies of biases that substantiate networks of power and inequality. To answer this question would involve a return to the human relationship with the machine. It is not meant to advocate for a divorce from technology, nor suggest that mathematics is to blame for human conditions. Instead, a new data humanism advocates for development that drives experimentation with the unknown. The provocation requires a shift in focus from experimentation as a means of understanding and ordering social processes to one which is more fugitive and leaves the artefact in place to drive new possibilities of the social. This humanism argues that only in this way can the deviances and deficits of datafied relations in terms of racism, classism, sexism, homophobia and general social disinterest be redefined as the authentic way of being. If not, we risk leaving these deviations behind, in the name of innovation and in the name of searches for the perfect human and the perfect solution, by means of the perfect machine.

'''Notes'''

*I See Oscar H. Gandy, Jr., Coming to Terms with Chance: Engaging Rational Discrimination and Cumulative Disadvantage.
*II Keith + Mendi Obadike, “The Black.Net.Art Actions: Blackness For Sale (2001), The Interaction of Coloreds (2002), And The Pink Of Stealth (2003)” in Re:Skin, edited by Mary Flanagan and Austin Booth. See pp. 245-250.
*III Christopher L. McGahan, Racing Cyberculture: Minoritarian Art and Cultural Politics on the Internet. See pp. 85-122.
*IV For an accessible account of race and technology, see Wendy Hui Kyong Chun, Programmed Visions: Software and Memory.
*V Wendy Hui Kyong Chun, Programmed Visions: Software and Memory. See p. 38.
*VI Rob Kitchin and Tukufu Zuberi give clear analysis of the vulnerabilities of data practices to discrimination and stereotype. See The Data Revolution: Big Data, Open Data, Data Infrastructures and Their Consequences and Thicker Than Blood: How Racial Statistics Lie, respectively.
*VII Thomas G. Dietterich, “Learning at the Knowledge Level,” in Machine Learning, vol . See pp. 287-316.
*VIII Allen Newell, “The Knowledge Level,” in AI Magazine, vol 2. See pp. 1-20.
*IX Thomas G. Dietterich, “Learning at the Knowledge Level,” in Machine Learning, vol . See pp. 287-316.
*X The Future of Life Institute describes itself as a ‘volunteer-run research and outreach organization working to mitigate existential risks facing humanity.’ The institute deals with issues of artificial intelligence and human interaction. See http://futureoflife.org/ai-news/

Latest revision as of 16:45, 16 December 2015

What would it take to adopt a fugitive statistics?

Lecture by Ramon Amaro during the Hackers & Designers Summer Talks 2015

I’d like to start with short descriptions of a few projects. The first is a conceptual work by artists Keith and Mendi Obadike called ‘Interaction of Coloreds'. Secondly, I’d like to discuss a research project conducted by Dr. Latanya Sweeney on the social implications of Google Adsense.

My hope is that this discussion will take us beyond our current understandings about data practices, user experience, user interaction or the coding environment, and instead intervene somewhere in between these spaces, where what I will later discuss are often unseen, yet explicitly clear violences that reveal themselves in data-dependent web and design practices. I use the word ‘violence’ here intentionally. It’s a strong word that few would associate with a matrix of data or with design and coding applications. After all it’s just code. It’s just data.… But, I believe violence is a necessary description of the possible residual effects of practices that do not carefully consider the cultural circumstances that inform the data we use as input for training purposes; or how interactions with our digital applications might be infused with cultural biases, even when these technologies (or the designers themselves) are not aware of the cultural impacts of the products they provide.

My concern here is then two-fold: how can theories behind the maths — and the digital practices that they power — inform certain rationales concerning the development of an ideal agency? What I mean is, how did the maths that inform our present coding languages, algorithm designs and other design practices come about, and through which logics? And, is there a conflict between how the maths were derived and how we apply them to today’s digital environments? Consider the genealogy of statistical regression or Bayesian probabilities, both of which are foundations of deep mining and machine learning design. There’s no time to go into the specifics here, but generally, linear regression came about through Poisson’s experimentations with jury decisions, which lead to his important work Law of Large Numbers (la loi des grands nombres) in 1837, a fundamental baseline for most quantitative research conducted today. In short, Law of Large Numbers applies statistical tests to deduce rare events, like jury decisions, into probabilities of certain outcomes, making it possible to incorporate variability into generalisations of otherwise chaotic systems. Nonetheless, Poisson viewed probabilities as degrees of belief, or judgements by rational individuals.

Now, we can debate ideas of what a rational individual is or should be, and perhaps even use that discussion to think about why we are so eager to build rational machines without a clear definition of what this means in the first place, but that’s for a later time. For now, I want to stress that early research into large group behaviour was viewed through contingencies or likelihoods, not certainties. It was not until likelihoods were put into use as mechanisms of prediction that large group behaviour, and subsequently control of these behaviours, turned into rationales of objectivity by mathematics that were very good — or bad depending on how you look at it — at stating what might occur when living beings exhibit certain characteristics associated with certain phenomenon. I hope at this point you can see how this logic has transferred into modern digital product development, where the rational machine is sufficient to define social qualities as well as reinforce its own role in knowing the unknowable.

Of course, these provocations illicit certain reactions in some design and research spaces, particularly those in deep engagement with quantitative study, statistical analysis or a sort of statistical coding, if you will, in practices like machine learning. After all, there are extensive genealogies of mathematical proofs that solidify our confidences in statistics, and extensive research that has placed the experiences of the user at the fore of complex mathematical and coding processes.

However, as cultural theorist Oscar H. Gandy, Jr. states, rational decision making plays a key role in the distribution of life chances.I I’m hoping this will become more clear later on when I talk about about specific cases, starting with an experiment in digital practice by Keith + Mendi Obadike. In August 2002, artists Keith + Mendi Obadike launched ‘The Interaction of Coloreds’ (Figure 1.0), commissioned by the Whitney Museum of American Art. The Interaction of Coloreds is a conceptual work for audio and the Internet. Through The Interaction of Coloreds, the artists ask how social filters, what Keith + Mendi Obadike define as 'systems by which people accept or reject members of a community', are in conversation with skin colour.II The artists draw on designer Josef Albers's Interaction of Color, a design text which features ten representative color studies chosen by Albers. Albers elaborates on the displacement of colour selection in the subjective by stating that our preference for certain colours is governed by an internal process. Re-conceptualising Alber's claim, Keith + Mendi Obadike explicitly note that practices of administering so-called ‘color tests’ originated on slave plantations as a basis for social selection. Brown paper bags were used to classify which slaves were suitable for certain work based on skin colour. Those with skin darker than the bag were sent to the fields. Those with lighter skin were placed indoors. The artists conceive that the practice of administering associations of skin colour with social production remains in conversation today in processes of employment seeking, university applications, consumer profiling, policing, security, transportation and economics.

By asking The Interaction of Coloreds participants to apply online for a hexadecimal code to identify their 'Websafe' skin colour, Keith + Mendi Obadike contend that colour can be defined more 'accurately using current race-based language’. The work serves as a marker by which participants can navigate the abstractions of social access, while making explicit the coding of skin color as a counter performance of blackness into the digital. As Christopher McGahan writes: '"Mongrel" forms of knowledge making are introduced into technologies of data gathering and organization where these are normally excluded and marginalized, allowing for a preliminary evaluation, from both a political and ethical vantage, of the technical and social functions of such technologies in practice'.III In this way Obadike's work is a reflection of the extensions of technologies into the racialisation of the body, not from simply conjoining the issues of race with technology to unravel the centrality of data processing on racial subjugation, but by displacing myths of race as either purely biological or purely cultural.IV

The conversations posed by Keith + Mendi Obadike bring into question the territorialisation of the subjective by means of social filter and the place of the digital within racialising practices. Through interaction with the code itself, it is within reason that the production of subjective categorisation is placed in reference to its explicit uses as value in a race-imbued society. The project's detailed application form (Figure 2), including phenotypic descriptions and family histories, amplifies the field of interactions between technologies of both the human and machine, where the algorithm itself, inclusive of its mathematical hypothesis, becomes a mediator between the acceptability of forced placement and subsequent restriction of movement in social space and time. Hui Kyong Chun stresses that the convergence of race and technology 'shifts the focus from the what of race to the how of race, from knowing race and doing race to emphasizing the similarities between race and technology.’V Hui Kyong Chun notes further that considerations of race as technology reduces the complexity of a fruitful body of research investigating race as a scientific category in favour of a race as practice that facilitates comparisons between entities classed as similar and dissimilar, in other words taxonomy.

This experiment in culture, or art (what is art if not an experiment in possibilities?), illuminate the role of digital design practices in the manoeuvrability of certain people. However, these experiments are limited to the speculative space. They have applicable consequences that have already foreclosed on the life chances of many individuals. Studies have shown that commonly used self-learning machines can produce results that reveal racist and other discriminatory practices, even if the algorithms are not designed with these intentions.VI In other words, machines may not yet comprehend the dynamics of racism, but they can be racist. For instance, in 2013 Dr. Latanya Sweeney, Professor of Government and Technology in Residence and Director of the Data Privacy Lab at Harvard University. In her study, Sweeney found that online ads suggesting arrest records appeared more often in searches for ‘black-sounding’ names than in searches for ‘white-sounding’ names. The obscurity of the measureables, ‘black-sounding’ and ‘white-sounding’, only illuminate the importance of Sweeney’s research question: Can racial groups be adversely affected by a digital application assumed to be egalitarian? Sweeney empirically questions suspected patterns of ad delivery in real-world searches. The study began with the assumption that personalized ads in Google Search suggestive of arrest records did not differ by race. This was accomplished by constructing the scientifically best instance of the pattern, or one with names shown to be racially identifying and pseudo-randomly selected. This was correlated with a proxy for race associated with racialised names, including hand picked names, and filtered through Google Images. What Sweeney found was ads displayed by Google Adsense suggesting arrest tended to appear with names associated with blacks, and neutral ads or no ads appeared with names associated with whites, regardless of whether that person had been previously arrested. 
The findings question the place of bias in realtime learning systems and how knowledge exchange operates despite the potential causes of discrimination.

Although Sweeney’s experiment does not point towards explicit intentionality by Google as the mediator of data exchange, much as in Foucault’s analysis of Borges animal taxonomy, it recognises the implicit nature of biases and suspicions of digital profiles that play themselves out at the point of user engagement. Therefore, as Sweeney asks, how can designer or coders think through societal consequences such as structural racism in technology design? And how can technologies be built to instead form new techno-political experiences for classified users?

The difficulty here is that most generalisation patterns seek to improve performance without altering existing knowledge frameworks.VII For knowledge-based learning researchers, knowledge is seen as ‘a succinct and efficient means of describing and predicting the behavior of a computer system’.VIII From within this context, advocates argue that knowledge should not be suppressed, and that it is important to introduce levels of description, such as previous knowledge, specification, design, verification, prediction, explanation, and so on, depending on the assigned task, even though every level of description is known to be incomplete.IX Otherwise, the complete details of a system are suppressed and held in partiality by every level in order to focus on certain aspects of the system deemed most important. Hence, systems at the level of inquiry are assumed to be approximations, incapable of making certain predictions since the details necessary to make complete assessments are missing.

Sweeney’s results are not alone in their implications. Other studies indicate that pricing and other automated digital commerce systems display higher prices in geographical areas known to have large concentrations of black and minority individuals. Analysis of Google Maps indicated that searches for racist expressions, such as the ‘n-word’, directed users to President Barack Obama’s residence at The White House. More recently, it was discovered that the photo service Flickr’s automated tagging system had mislabeled a black woman under the category of ‘monkeys’, a racial stereotype often associated with black people.

Although these results are striking, there is little data to indicate how the machines determined these outcomes. To what degree are self-learning machines influenced by human factors, like racial profiling? To what extent do machines learn to not just replicate social discriminations, but make decisions based on race-based factors? As machines become more sophisticated at relying on their own rationales to make decisions, will they one day decide on their own to preference some individuals and populations over others? And, if so, what value systems will they place on the outcomes? Although these questions are of immediate importance, current research lacks sufficient insight into these concerns.

One reason we fail to fully comprehend these dynamics is that current research does not extend far enough to reveal the full scope of how machines use data to learn and interpret their environments. Neither does it fully consider the types of data that are used to train the learning process. Further, most policy research on the future of self-learning environments speculates on the species-level impact of machine automation, where questions of human extinction mute more immediate concerns about the potential for local-level violences and discriminations.X For instance, current research on self-driving vehicles evaluates machine performance based on autonomous decisions about the safety of drivers and pedestrians. In dynamic (or real world) environments, these decisions might force the preference of one life over another to avoid an accident or in case of emergency. However, if machines have the capacity to place value on certain lives over others, is it plausible that they could also use specific cultural input data to determine which lives are preferenced based on factors other than personal safety? The same claims can be extended to automated weaponry and self-learning drones in the contexts of security. Considering these ethical factors does not diminish the fact that our understanding of autonomous decision making is still in its infancy, which increases the importance of addressing these concerns today.

To conclude, I question how we might approach data from an alternative view that assumes any information mined in human environments already contains pre-existing cultural biases that can contaminate the machine learning process. As machines gain greater ability to automate and self-train, we cannot ignore the fact that machines, despite their levels of sophistication, are limited by the sensory perceptions of their environments. At present these environments are infused with data that articulate ecologies of biases that substantiate networks of power and inequality. To answer this question would involve a return to the human relationship with the machine. It is not meant to advocate for a divorce from technology, nor to suggest that mathematics is to blame for human conditions. Instead, a new data humanism advocates for development that drives experimentation with the unknown. The provocation requires a shift in focus from experimentation as a means of understanding and ordering social processes to one which is more fugitive and leaves the artefact in place to drive new possibilities of the social. This humanism argues that only in this way can the deviances and deficits of datafied relations in terms of racism, classism, sexism, homophobia and general social disinterest be redefined as the authentic way of being. If not, we risk leaving these deviations behind, in the name of innovation and in the name of searches for the perfect human and the perfect solution, by means of the perfect machine.

Notes

- I See Oscar H. Gandy, Jr., Coming to Terms with Chance: Engaging Rational Discrimination and Cumulative Disadvantage.

- II Keith + Mendi Obadike, “The Black.Net.Art Actions: Blackness For Sale (2001), The Interaction of Coloreds (2002), And The Pink Of Stealth (2003)” in Re:Skin, edited by Mary Flanagan and Austin Booth. See pp. 245 - 250.

- III Christopher L. McGahan, Racing Cyberculture: Minoritarian Art and Cultural Politics on the Internet. See pp. 85 - 122.

- IV For an accessible account of race and technology, see Wendy Hui Kyong Chun, Programmed Visions: Software and Memory.

- V Wendy Hui Kyong Chun, Programmed Visions: Software and Memory. See p. 38.

- VI Rob Kitchin and Tukufu Zuberi give clear analyses of the vulnerabilities of data practices to discrimination and stereotype. See The Data Revolution: Big Data, Open Data, Data Infrastructures and Their Consequences and Thicker Than Blood: How Racial Statistics Lie, respectively.

- VII Thomas G. Dietterich, “Learning at the Knowledge Level,” in Machine Learning, vol . See pp. 287-316.

- VIII Allen Newell, “The Knowledge Level,” in AI Magazine, vol 2. See pp. 1-20.

- IX Thomas G. Dietterich, “Learning at the Knowledge Level,” in Machine Learning, vol . See pp. 287-316.

- X The Future of Life Institute describes itself as a ‘volunteer-run research and outreach organization working to mitigate existential risks facing humanity.’ The institute deals with issues of artificial intelligence and human interaction. See http://futureoflife.org/ai-news/